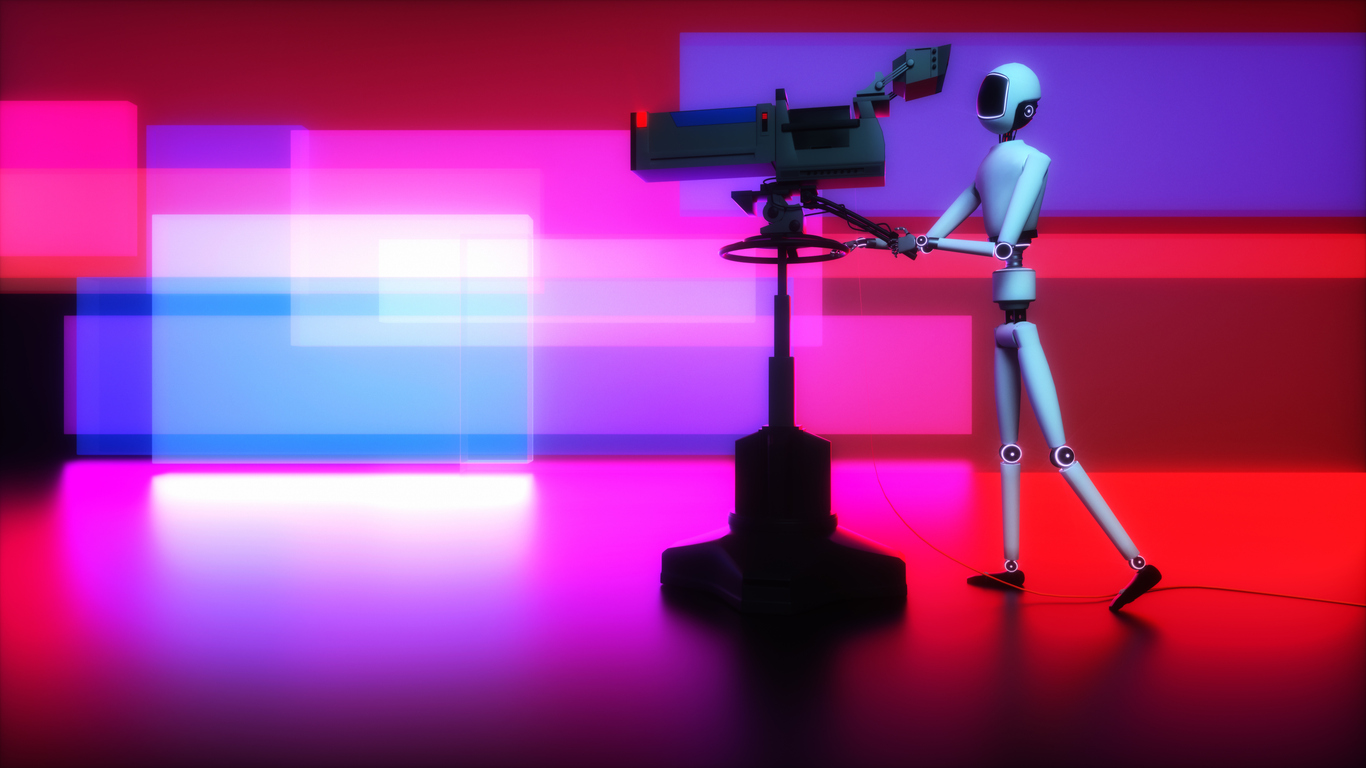

The future of video storytelling in an AI-driven world

Ready or not, we’re hurtling toward a world replete with AI-generated video.

AI-generated video often ignites more trepidation about misinformation and deepfakes than optimism about its potential applications. Either way, communicators will need to familiarize themselves with the medium to prepare for what’s coming. There’s a great deal of potential to be unlocked, along with pitfalls on the road ahead.

Ragan asked AI experts to look into their crystal GPTs and share what they foresee for the future of video storytelling and how communicators can prepare.

What’s ahead

Given the increasing ubiquity of AI-generated text and images, there’s no doubt that AI will also continue to suffuse all types of video in the coming months and years.

“In the coming years, AI will become the foundation for the vast majority of video storytelling because it will save companies so much time and money,” said Stephanie Nivinskus, CEO at SizzleForce Marketing. And as a consequence: “The ability for humans to discern between videos created with AI versus without it will become increasingly more difficult as the technology improves.”

Steve Mudd, CEO of Talentless AI, also foresees AI lowering barriers to entry for video content creation. That would extend an ongoing trend, as platforms such as TikTok and Instagram Reels have already made social video cheaper, faster and simpler for the average user to create.

“With synthetic media, in the time it takes to create one video, you can set yourself up to create dozens,” Mudd said. “For any business that relies on a consistent stream of video content, this can be a game changer, eliminating the gaps that you often see in social when people run out of content.”

David Quiñones, VP of editorial and content at RockOrange, is wary of what’s next but expects both benefits and downsides. “Hopefully, the biggest impact AI will have is in democratizing the space, breaking down the expensive barriers of entry and making the only limits for independent creatives the edges of their imaginations,” he said. “This will likely be ‘disruptive,’ but frankly the larger corporate purveyors of content — music labels, film studios, streaming services and network television — could use a firm shakeup.”

The future is (more or less) here

So far, AI-generated video has largely been found in the realms of deepfakes, AI influencers and one-off stunts, but some brands and organizations are dipping their toes into more practical applications.

Nivinskus pointed out that AI video software company Synthesia, for example, features a video testimonial from Heineken, which has used its software. She also expects to see video experimentation from Arizona State University, which partnered with OpenAI to shape an AI-driven future for higher education.

Mudd noted that Perplexity’s daily podcast uses synthetic voices, another application that may be infused into more video storytelling in the future.

But it’s not all sunshine and roses ahead.

During a presentation at Ragan’s recent Social Media Conference, Quiñones pointed to the use of the AI tool Stable Diffusion to create the opening credits for the Disney+ show “Secret Invasion.” The social media backlash was swift and fierce, with fans angered by what they perceived as a lazy move that replaced human creativity with AI.

He suggests experimentation, tempered by caution — and perhaps waiting for others to trip up first. “As a content professional who also works in crisis management and strategy, I urge my partners not to be on the front line of adopting AI video content,” he said.

Transparency is the way forward

As usual, ethics and copyright are major factors that will shape the flow of AI-generated storytelling. The industry and legislators have a great deal of work to do.

“Synthetic media companies have become a lot more proactive about guardrails in their systems to prevent the unauthorized replication of people’s likenesses,” Mudd noted, referring to deepfakes. But he added: “Not knowing what data is being used to train models is casting a pall over the technology and its applications. Big Tech needs to deliver a lot more clarity than they have been.”

This murkiness around sourcing has already resulted in major lawsuits, such as the New York Times’ action against OpenAI.

The key for communicators now: Be honest about what you’re trying and the tools you’re using.

“AI is still a divisive concept for wide swaths of the population,” Quiñones said. “The biggest pitfall is in transparency, or more specifically the lack of it. If you’re showing people something created by AI, you have to tell them so or run the risk of eroding trust.”

There’s also the fact that AI-generated video is still often afflicted by the uncanny valley — “the imperfections found in videos that feature humans,” Nivinskus said. “For example, human eyes are incredibly expressive. In real life, we’ve all seen a skeptic narrow their eyes, an annoyed person roll their eyes, and a surprised person widen their eyes. Most AI-generated videos at this point create humans void of natural eye movements and other distinctly human traits.”

Quiñones sees the “wrongness” of AI-generated video, and the instinctive unease it provokes, as a benefit for humanity. “Hundreds of thousands of years later, this collective socio-emotional response might just be a saving grace as fully AI-generated content, no matter how well prompted and considered, is simply not satisfying,” he said.

Ready, set

Nivinskus and Mudd advised communicators to learn the basics of common AI video software, including Synthesia, HeyGen, ElevenLabs, pika.art, twisty.ai and runwayml.com, by watching video tutorials about how the tools work. Quiñones also suggested trying out Coursera’s beginner courses.

“Get your hands dirty and start using the software yourself, make mistakes, and in doing so, learn how to do things you never thought possible,” Nivinskus said.

The Ragan editorial team has also tested tools such as Lumen5 and Pictory for converting articles to video. (Stay tuned.)

“Until you’ve actually played with the tools, you won’t really understand,” Mudd said. “The paradigm has shifted. This is not about adapting your old understanding to the new world. This is about throwing out everything you know and embracing a whole new paradigm.”

Learn more about the vast applications and tactics for shaping an AI-fueled comms strategy—as well as cautionary tales—at Ragan’s 2024 Future of Communications Conference, Nov. 13-15 in Austin, Texas, and at Comms Week events around the world.

Jess Zafarris is the director of content at Ragan and PR Daily, and an author, editor and social media strategist.